Plan for Today #

- Unit Testing

Unit Testing #

Bugs Happen! #

Complexity can have consequences!

- the crash of the Mars Climate Orbiter (1999),

- a failure of the national telephone network (1990),

- a deadly medical device (1985, 2000),

- a massive Northeastern blackout (2003),

- the Heartbleed, Goto Fail, and Shellshock exploits (2012–2014),

- a 15-year-old fMRI analysis software bug that inflated significance levels (2015).

It is easy to write a thousand lines of research code, then discover that your results have been wrong for months.

A Common Workflow #

- Write some code

- Try it on some cases interactively

- Repeat until everything “seems fine”

Better than nothing but not reliable!

What can we do? #

- Good practices and design - always a good idea

- Proving correctness - increasingly feasible, but still limited

- Testing - try to break the system, but systematically

Types of testing #

- unit testing
  - tests focused on small, localized/modular pieces of the code; unit tests are focused and loosely coupled (or independent when possible)
- generative testing
  - randomly generates inputs that satisfy specified constraints, checks that stated properties hold, and reports a minimal failing input when a property fails
- integration testing
  - tests how system components fit together and interact
- end-to-end testing
  - tests a user's entire workflow
- acceptance testing
  - checks whether the system meets its desired specifications
- regression testing
  - tests run after system changes to check that nothing that used to work has broken
- top-down testing
  - tests based on high-level requirements

We will focus on unit testing, but keep in mind that some of the boundaries are blurry (and these are not mutually exclusive categories).

Unit Testing #

Unit testing consists of writing tests that are

- focused on a small, low-level piece of code (a unit)

- typically written by the programmer with standard tools

- fast to run (so they can be run often, e.g., before every commit).

A test is simply some code that calls the unit with some inputs and checks that its answer matches an expected output.
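For example, here is a minimal sketch in plain Python with no framework at all. The function `clamp` is a hypothetical unit invented for illustration; a framework like pytest would find and run a function named `test_*` like this one automatically and report the result.

```python
def clamp(x, lo, hi):
    """Hypothetical unit under test: restrict x to the interval [lo, hi]."""
    return max(lo, min(x, hi))


def test_clamp():
    # Each line calls the unit with known inputs and checks the expected output.
    assert clamp(5, 0, 10) == 5     # inside the interval: unchanged
    assert clamp(-3, 0, 10) == 0    # below the interval: raised to lo
    assert clamp(42, 0, 10) == 10   # above the interval: lowered to hi


test_clamp()  # raises AssertionError if any check fails
```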

Many benefits, including:

- Exposes problems early

- Makes it easy to change (refactor) code without forgetting pieces or breaking things

- Simplifies integration of components

- Executable documentation of what the code should do

- Drives the design of new code.

It takes time to test but can save time in the end.

Testing Framework #

A test is a collection of assertions executed in sequence, within a self-contained environment.

The test fails if any of those assertions fail.

Tests should be:

- focused on a single feature/function/operation

- well named so a failure gives you a precise pointer

- replicable/pure, returning consistent results if nothing changes

- isolated, with minimal dependencies

- clear/readable, so a failure is easy to interpret

- fast, encouraging lots of tests run frequently

```r
library(testthat)

test_that("Conway's rules are correct", {
  # conway_rules(num_neighbors, alive?): is the cell alive in the next generation?
  expect_true(conway_rules(3, FALSE))
  expect_false(conway_rules(4, FALSE))
  expect_true(conway_rules(2, TRUE))
  ...
})
```

(This uses the testthat package for R, available from CRAN.)
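The same test can be sketched in Python in the style pytest expects: a function named `test_...` that uses plain `assert`. The body of `conway_rules` is our assumption, following the standard Life rules (a live cell survives with 2 or 3 neighbors; a dead cell comes alive with exactly 3).

```python
def conway_rules(num_neighbors, alive):
    """Hypothetical implementation: is the cell alive in the next generation?"""
    if alive:
        return num_neighbors in (2, 3)   # survival
    return num_neighbors == 3            # birth


def test_conway_rules():
    assert conway_rules(3, False)        # birth
    assert not conway_rules(4, False)    # no birth with 4 neighbors
    assert conway_rules(2, True)         # survival
    assert not conway_rules(1, True)     # dies of underpopulation
    assert not conway_rules(4, True)     # dies of overpopulation
```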

A test suite is a collection of related tests in a common context.

If a test suite needs to prepare a common environment for each test, and possibly clean up after each test, we create a fixture.

Examples: data set, database connection, configuration files

It is common to mock these resources: “stunt doubles” that mimic the structure and behavior of the real thing during the test.
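A sketch using Python's built-in unittest: `setUp` and `tearDown` act as a fixture that creates and deletes a temporary config file around each test, and `unittest.mock.Mock` stands in for an external resource such as a database client. Both units (`load_threshold`, `fetch_and_scale`) are hypothetical examples; run the tests with `python -m unittest` or `pytest`.

```python
import os
import tempfile
import unittest
from unittest import mock


def load_threshold(path):
    """Hypothetical unit: read a numeric threshold from a config file."""
    with open(path) as f:
        return float(f.read().strip())


def fetch_and_scale(client, key):
    """Hypothetical unit: fetch a value from an external resource and double it."""
    return 2 * client.get(key)


class ConfigTests(unittest.TestCase):
    def setUp(self):
        # Fixture: a fresh temporary config file before each test.
        fd, self.path = tempfile.mkstemp(suffix=".cfg")
        with os.fdopen(fd, "w") as f:
            f.write("0.05\n")

    def tearDown(self):
        # Clean up after each test, whether it passed or failed.
        os.remove(self.path)

    def test_load_threshold(self):
        self.assertEqual(load_threshold(self.path), 0.05)

    def test_fetch_and_scale_with_mock(self):
        # Mock: a stunt double for a real client; no connection is made.
        client = mock.Mock()
        client.get.return_value = 21
        self.assertEqual(fetch_and_scale(client, "answer"), 42)
        client.get.assert_called_once_with("answer")
```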

Test Runners #

There is a wide variety of test runners for each language and a lot of sharing of ideas between them.

| Language | Recommended |
|---|---|
| R | testthat |
| Python | pytest, Hypothesis |
| JavaScript | Jest |
| Clojure | clojure.test, test.check |
| Java | JUnit |
| Haskell | HUnit, QuickCheck |
| … | |
| General | Cucumber |

Test runners accept assertions in different forms and produce a variety of customizable reports.

Here’s a simple test using pytest:

```python
# in main_code.py

def add1(x):
    return x + 1


def volatile():
    raise SystemExit(1)
```

```python
# in test_sample.py
import pytest

from main_code import add1, volatile


def test_incr():
    assert add1(3) == 4


def test_except():
    # passes only if volatile() raises SystemExit
    with pytest.raises(SystemExit):
        volatile()
```

Here’s a report from Python’s built-in unittest:

```
$ python test/trees_test.py -v
test_crime_counts (__main__.datatreetest)
ensure ks are consistent with num_points. ... ok
test_indices_sorted (__main__.datatreetest)
ensure all node indices are sorted in increasing order. ... ok
test_no_bbox_overlap (__main__.datatreetest)
check that child bounding boxes do not overlap. ... ok
test_node_counts (__main__.datatreetest)
ensure that each node's point count is accurate. ... ok
test_oversized_leaf (__main__.datatreetest)
don't recurse infinitely on duplicate points. ... ok
test_split_parity (__main__.datatreetest)
check that each tree level has the right split axis. ... ok
test_trange_contained (__main__.datatreetest)
check that child tranges are contained in parent tranges. ... ok
test_no_bbox_overlap (__main__.querytreetest)
check that child bounding boxes do not overlap. ... ok
test_node_counts (__main__.querytreetest)
ensure that each node's point count is accurate. ... ok
test_oversized_leaf (__main__.querytreetest)
don't recurse infinitely on duplicate points. ... ok
test_split_parity (__main__.querytreetest)
check that each tree level has the right split axis. ... ok
test_trange_contained (__main__.querytreetest)
check that child tranges are contained in parent tranges. ... ok
----------------------------------------------------------------------
Ran 12 tests in 23.932s

OK
```

Organization #

Tests are often kept in separate directories, in files named so that the test runner can discover them automatically (e.g., test_foo.py).

You can run individual tests, all of them, or filter by name patterns.

```r
# testthat: run every test file in the tests/ directory
test_dir("tests/")
```
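In Python, filtering by name looks like `pytest -k tree` or `python -m unittest -k tree` on the command line. The sketch below does the same programmatically with unittest's `TestLoader.testNamePatterns` (Python 3.7+), whose shell-style patterns are matched against each test's full dotted name. The test class and its contents are hypothetical placeholders.

```python
import unittest


class TreeTests(unittest.TestCase):
    # Hypothetical tests; only their names matter for this sketch.
    def test_tree_insert(self):
        self.assertEqual(sorted([3, 1, 2]), [1, 2, 3])

    def test_tree_delete(self):
        self.assertNotIn(2, {1, 3})

    def test_parse_header(self):
        self.assertTrue("id,name".startswith("id"))


def run_matching(pattern):
    """Run only the TreeTests methods whose full name matches the pattern."""
    loader = unittest.TestLoader()
    loader.testNamePatterns = [pattern]  # fnmatch-style, case-sensitive
    suite = loader.loadTestsFromTestCase(TreeTests)
    return unittest.TextTestRunner(verbosity=2).run(suite)


result = run_matching("*tree*")  # selects test_tree_insert and test_tree_delete
```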

Some guiding principles:

- Keep tests in separate files from the code they test.

- Give tests good names. (Which is clearer: test_1 or test_tree_insert?)

- Make tests replicable. If a test fails with random data, how do you know what went wrong?

- Make your bugs into tests

- Use separate tests for unrelated assertions

- Test before every commit
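Two of these principles in a short sketch: random inputs come from a generator with a fixed seed, so any failure reproduces exactly, and a (hypothetical) past bug is pinned down by its own regression test. The function `normalize`, the seed value, and the bug are all invented for illustration.

```python
import random


def normalize(weights):
    """Hypothetical unit: rescale non-negative weights so they sum to 1."""
    if not weights:
        return []
    total = sum(weights)
    if total == 0:
        # Fix for a hypothetical past bug: all-zero input used to raise
        # ZeroDivisionError; it now returns equal weights.
        return [1 / len(weights)] * len(weights)
    return [w / total for w in weights]


def test_normalize_sums_to_one_on_random_inputs():
    rng = random.Random(20240101)  # fixed seed: a failure reproduces exactly
    for _ in range(100):
        weights = [rng.uniform(0, 10) for _ in range(rng.randint(1, 20))]
        assert abs(sum(normalize(weights)) - 1) < 1e-9


def test_normalize_all_zero_regression():
    # "Make your bugs into tests": this input once crashed.
    assert normalize([0, 0, 0, 0]) == [0.25, 0.25, 0.25, 0.25]
```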

For some tutorial guidance in Python and R testing, see Reference Notes Tutorial.

Big Issue #1: What To Test #

Core principle: tests should pass for correct functions and fail for incorrect ones.

This seems simple enough, but it is deeper than it might appear:

```r
test_that("Addition is commutative", {
  expect_equal(add(1, 3), add(3, 1))
})

# This passes too
add <- function(a, b) {
  return(4)
}

# This too!
add <- function(a, b) {
  return(a * b)
}
```
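The same point in Python: the commutativity check accepts both wrong implementations above, while a handful of inputs with independently known answers rejects them. The function names are our own.

```python
def add_correct(a, b):
    return a + b


def add_constant(a, b):  # wrong: always returns 4
    return 4


def add_multiply(a, b):  # wrong: multiplies instead of adding
    return a * b


def commutativity_test(add):
    """The weak test from above: only checks add(1, 3) == add(3, 1)."""
    return add(1, 3) == add(3, 1)


def known_answer_test(add):
    """Stronger: compare against answers we know independently."""
    cases = [((1, 3), 4), ((0, 0), 0), ((-2, 5), 3), ((2, 2), 4)]
    return all(add(a, b) == expected for (a, b), expected in cases)


for f in (add_correct, add_constant, add_multiply):
    print(f.__name__, commutativity_test(f), known_answer_test(f))
# add_correct True True
# add_constant True False
# add_multiply True False
```

Note that the case (2, 2) alone would not help: both wrong versions return 4 there. Several distinct cases are what make the test discriminating.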

Guidelines:

- Test several specific inputs for which you know the correct answer

- Test “edge” cases, like a list of size zero or size eleventy billion

- Test special cases that the function must handle, but which you might forget about months from now

- Test error cases that should throw an error instead of returning an invalid answer

- Test any previous bugs you’ve fixed, so those bugs never return.

- Try to cover all the various branches in your code
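Here is a sketch applying these guidelines to a hypothetical `median` function, with one unittest method per kind of case: known answers, an edge case, a special case, an error case, and inputs that exercise both the odd- and even-length branches.

```python
import unittest


def median(xs):
    """Hypothetical unit: median of a non-empty sequence of numbers."""
    if len(xs) == 0:
        raise ValueError("median of empty sequence")
    s = sorted(xs)
    mid = len(s) // 2
    if len(s) % 2 == 1:
        return s[mid]                    # odd length: middle element
    return (s[mid - 1] + s[mid]) / 2     # even length: mean of the middle two


class MedianTests(unittest.TestCase):
    def test_known_answers(self):
        self.assertEqual(median([3, 1, 2]), 2)
        self.assertEqual(median([4, 1, 3, 2]), 2.5)

    def test_edge_single_element(self):
        self.assertEqual(median([7]), 7)

    def test_special_case_duplicates(self):
        self.assertEqual(median([5, 5, 1, 5]), 5)

    def test_error_on_empty(self):
        with self.assertRaises(ValueError):
            median([])

    def test_both_branches_on_larger_inputs(self):
        self.assertEqual(median(list(range(1001))), 500)    # odd branch
        self.assertEqual(median(list(range(1000))), 499.5)  # even branch
```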

Scenario 1. Find the maximum sum of a contiguous subsequence #

Function name: max_sub_sum(arr: Array<Number>) -> Number

max_sub_sum([]) = 0

max_sub_sum([x]) = x

max_sub_sum([1, -4, 4, 2, -2, 5]) = 9

In general, max_sub_sum returns the maximum sum found in any contiguous subvector of the input.

Finding the algorithm for this is fun (as we will see later), but for now, how do we test it? (See the max-sub-sum exercise.)
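One testing idea, sketched without giving away the fast algorithm: a slow but obviously correct brute-force version can serve as an oracle, and any faster candidate can be compared against it on many random inputs. We assume the convention implied by the examples: the empty input gives 0, and otherwise only non-empty subvectors count (so a single negative element is its own answer). Unlike Hypothesis, this simple loop does not shrink a failing input to a minimal one.

```python
import random


def max_sub_sum_reference(arr):
    """Brute-force oracle: try every non-empty contiguous subarray, O(n^2)."""
    if not arr:
        return 0
    best = arr[0]
    for i in range(len(arr)):
        total = 0
        for j in range(i, len(arr)):
            total += arr[j]          # total == sum(arr[i:j + 1])
            best = max(best, total)
    return best


def find_counterexample(candidate, trials=500, seed=0):
    """Return the first random input where candidate disagrees with the oracle, else None."""
    rng = random.Random(seed)
    for _ in range(trials):
        arr = [rng.randint(-10, 10) for _ in range(rng.randint(0, 8))]
        if candidate(arr) != max_sub_sum_reference(arr):
            return arr
    return None


# The examples from the specification.
assert max_sub_sum_reference([]) == 0
assert max_sub_sum_reference([-3]) == -3
assert max_sub_sum_reference([1, -4, 4, 2, -2, 5]) == 9


def sum_of_positives(arr):
    """A plausible but wrong candidate: adds up every positive entry."""
    return sum(x for x in arr if x > 0)


print(find_counterexample(sum_of_positives))  # prints some failing input
```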

Scenario 2. Create a half-space for a given vector #

Function: half_space_of(point: Array<Number>) -> (Array<Number> -> boolean)

Given a point in R^n, return a boolean function that tests whether a new point of the same dimension is in the positive half-space of the original vector, i.e., whether its dot product with that vector is positive.

```r
foo <- half_space_of(c(2, 2))

foo(c(1, 1)) == TRUE
```
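A Python sketch of one possible implementation together with test cases. We assume "positive" means a strictly positive dot product, so points on the boundary hyperplane are excluded; whichever convention you pick, a test for the boundary case pins it down. Rejecting a dimension mismatch with `ValueError` is also our choice.

```python
def half_space_of(point):
    """Return a predicate testing whether y has a strictly positive dot product with point."""
    v = list(point)

    def contains(y):
        if len(y) != len(v):
            raise ValueError("dimension mismatch")
        return sum(a * b for a, b in zip(v, y)) > 0

    return contains


foo = half_space_of([2, 2])
assert foo([1, 1])          # the example above: 2*1 + 2*1 = 4 > 0
assert not foo([-1, -1])    # opposite direction
assert not foo([1, -1])     # on the boundary: dot product is 0
assert foo([2, 2])          # a nonzero vector lies in its own half-space
```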

Scenario 3. What’s the closest pair of points? #

Function name: closest_pair(points)

Given a set of points in the 2D plane, find the pair of points which are the closest together, out of all possible pairs.

Later we will learn a good algorithm, using divide and conquer, to solve this without comparing all possible pairs. For now, let’s think of tests.

Question: are there properties of closest_pair that can be tested? Could we generate random data and test that these properties hold?

- Test Ideas?
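Here is a sketch of property-based tests on random data. We assume points are (x, y) tuples and use a brute-force closest_pair as a stand-in; in practice, the properties would check a faster implementation, with the brute-force version as an oracle. Integer coordinates keep the distance comparisons exact, and the fixed seed keeps any failure reproducible.

```python
import itertools
import math
import random


def closest_pair(points):
    """Brute-force stand-in: compare all pairs, O(n^2). Needs at least 2 points."""
    if len(points) < 2:
        raise ValueError("need at least two points")
    return min(itertools.combinations(points, 2),
               key=lambda pq: math.dist(pq[0], pq[1]))


def check_properties(points, rng):
    p, q = closest_pair(points)
    d = math.dist(p, q)
    # 1. The answer consists of points from the input.
    assert p in points and q in points
    # 2. No pair is strictly closer.
    assert all(math.dist(a, b) >= d for a, b in itertools.combinations(points, 2))
    # 3. Shuffling the input does not change the closest distance.
    shuffled = points[:]
    rng.shuffle(shuffled)
    assert math.dist(*closest_pair(shuffled)) == d
    # 4. Translating every point does not change the closest distance.
    dx, dy = rng.randint(-100, 100), rng.randint(-100, 100)
    moved = [(x + dx, y + dy) for x, y in points]
    assert math.dist(*closest_pair(moved)) == d


rng = random.Random(0)
for _ in range(200):
    n = rng.randint(2, 30)
    pts = [(rng.randint(-50, 50), rng.randint(-50, 50)) for _ in range(n)]
    check_properties(pts, rng)
```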

Scenario 4. Game of Life #

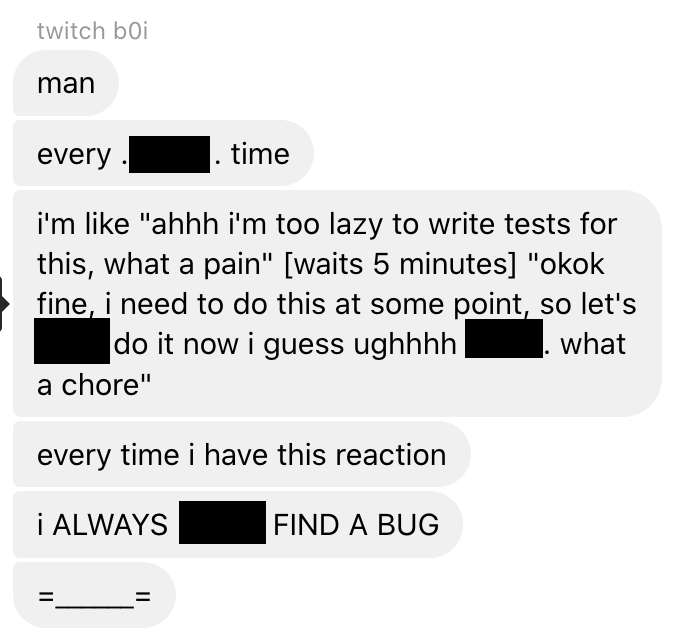
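Known patterns make good Game of Life test cases, because their correct answers are known in advance: a "block" never changes, and a "blinker" alternates between two shapes. The sketch below assumes the board is an unbounded grid stored as a set of live (row, col) cells; `step` is a hypothetical name for the one-generation update.

```python
from collections import Counter


def step(live):
    """One Game of Life generation; live is a set of (row, col) cells."""
    # Count live neighbors of every cell adjacent to at least one live cell.
    counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Birth with exactly 3 neighbors; survival with 2 or 3.
    return {cell for cell, n in counts.items()
            if n == 3 or (n == 2 and cell in live)}


def test_block_is_still_life():
    block = {(0, 0), (0, 1), (1, 0), (1, 1)}
    assert step(block) == block


def test_blinker_has_period_two():
    horizontal = {(1, 0), (1, 1), (1, 2)}
    vertical = {(0, 1), (1, 1), (2, 1)}
    assert step(horizontal) == vertical
    assert step(vertical) == horizontal


def test_lonely_cell_dies():
    assert step({(5, 5)}) == set()
```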

Big Issue #2: Test-Enhanced Development #

Test Driven Development (TDD) #

Test Driven Development (TDD) uses a short development cycle for each new feature or component:

- Write tests that specify the component’s desired behavior. The tests will initially fail as the component does not yet exist.

- Create the minimal implementation that passes the test.

- Refactor the code to meet design standards, running the tests with each change to ensure correctness.
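The cycle can be sketched on a tiny hypothetical function, `word_count`. The tests come first and pin down the behavior, including whitespace edge cases; the implementation is the smallest thing that makes them pass.

```python
# Step 1 (red): write the tests first. Running them before word_count
# exists fails with a NameError, which is the expected starting point.
def test_word_count():
    assert word_count("") == 0
    assert word_count("one") == 1
    assert word_count("two  words") == 2        # repeated spaces
    assert word_count("  padded\ttext\n") == 2  # other whitespace


# Step 2 (green): the minimal implementation that makes the tests pass.
def word_count(text):
    """Hypothetical unit: number of whitespace-separated words in text."""
    return len(text.split())


# Step 3 (refactor): change the code freely, re-running the tests each time.
test_word_count()
```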

Why work this way?

- Writing the tests may help you realize what arguments the function must take, what other data it needs, and what kinds of errors it needs to handle.

- The tests define a specific plan for what the function must do.

- You will catch bugs at the beginning instead of at the end (or never).

- Testing is part of design, instead of a lame afterthought you dread doing.

Try it. We will expect to see tests with your homework anyway, so you might as well write the tests first!

Behavior Driven Development (BDD) #

Software is designed, implemented, and tested with a focus on the behavior a user expects to experience when interacting with it.
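BDD tools such as Cucumber write scenarios in a Given/When/Then form. The sketch below only borrows that structure for plain Python tests (it does not use Cucumber); the `Account` class is a hypothetical component described from the user's point of view.

```python
class Account:
    """Hypothetical component whose behavior we describe from a user's view."""

    def __init__(self, balance):
        self.balance = balance

    def withdraw(self, amount):
        if amount > self.balance:
            raise ValueError("insufficient funds")
        self.balance -= amount


def test_user_withdraws_within_balance():
    # Given an account holding 100
    account = Account(100)
    # When the user withdraws 30
    account.withdraw(30)
    # Then the remaining balance is 70
    assert account.balance == 70


def test_user_cannot_overdraw():
    # Given an account holding 20
    account = Account(20)
    # When the user tries to withdraw 50
    try:
        account.withdraw(50)
        refused = False
    except ValueError:
        refused = True
    # Then the withdrawal is refused and the balance is unchanged
    assert refused
    assert account.balance == 20
```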

Resources #

For whatever language you use, there is likely already a unit testing framework which makes testing easy to do. No excuses!

R #

See the Testing chapter from Hadley Wickham’s R Packages book for examples using testthat.

Python #

- pytest (more ergonomic, recommended)

- unittest (built-in, nice)

- Hypothesis (generative testing)

Java #

- JUnit (the original)

Clojure #

- clojure.test (built in)

- midje (excellent!)

- test.check (generative)

JavaScript #

- Jest

C++ #

- Boost.Test

- CppUnit

- Catch

- lest (>= C++11)

Haskell #

- HUnit

- QuickCheck (generative)